In the rapidly evolving field of artificial intelligence, the development of large language models (LLMs) has garnered significant attention. With a tech giant like OpenAI behind a leading proprietary model such as ChatGPT, the question arises: can an open-source LLM effectively compete? Let’s explore the capabilities, challenges, and potential of open-source LLMs in the AI landscape.

What is an Open-Source LLM?

An open-source LLM is a language model developed with freely accessible code and data, allowing developers worldwide to contribute to it, modify it, and distribute their improvements. These models are essential tools in natural language processing, enabling applications from chatbots to advanced analytical tools. Examples include Google’s Gemma and Mistral, and Meta’s recently released Llama 3.1 has also made headlines for its impressive results.

Competing with Giants: The OpenAI and Google Models

The landscape of artificial intelligence has been significantly shaped by closed models like OpenAI’s GPT series and Google’s Gemini. These models have set high standards in terms of performance and innovation, benefiting from substantial investment and years of research.

Can Open Source AI Models Catch Up?

Despite the formidable lead of these models, open-source LLMs are advancing rapidly. The open-source community is making strides in AI development, though it lacks the extensive resources and, to a certain extent, the concentrated talent of the large labs. Training GPT-4 is estimated to have cost around $63 million, primarily in server costs, which highlights the financial challenge facing open-source projects.
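To see why server costs dominate, here is a minimal back-of-the-envelope sketch. It assumes the simplest possible model, where cost is the number of GPUs times the hours they run times an hourly price per GPU. The function name and every input value are hypothetical placeholders chosen for illustration, not figures for GPT-4 or any real training run.

```python
def estimate_training_cost(num_gpus: int, days: float, usd_per_gpu_hour: float) -> float:
    """Rough compute bill: GPUs x hours x hourly price per GPU.

    Ignores storage, networking, staff, and failed runs, so real
    budgets would be higher than this estimate.
    """
    gpu_hours = num_gpus * days * 24
    return gpu_hours * usd_per_gpu_hour


# Placeholder inputs for illustration only (assumptions, not real data).
cost = estimate_training_cost(num_gpus=1_000, days=30, usd_per_gpu_hour=2.0)
print(f"Estimated compute cost: ${cost:,.0f}")
```

Even with these modest placeholder values the bill runs into seven figures, and it scales linearly with each input, which is why large training runs are so hard to fund without outside support.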

To compete effectively, open-source models would need significant external support, both financial and computational. Partnerships with corporations like Meta, which supports projects such as Llama, demonstrate how crucial corporate sponsorship is in scaling these technologies.

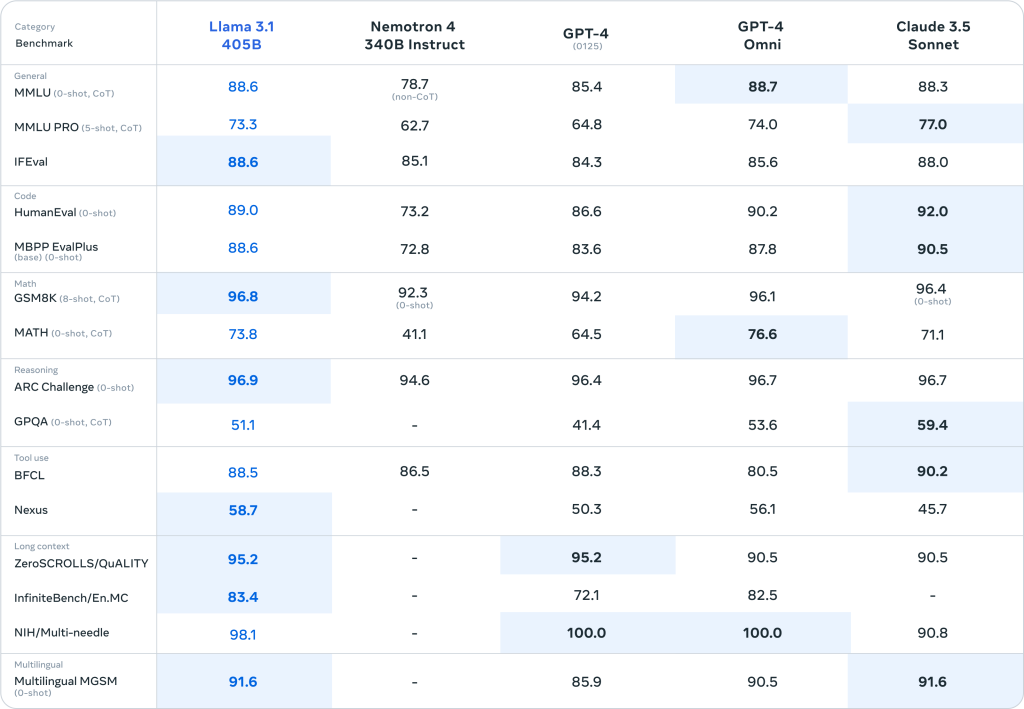

Recently, Meta released the Llama 3.1 models in 8B, 70B, and 405B configurations, which feature extended context lengths and enhanced reasoning capabilities for complex tasks such as long-form text summarization and coding assistance. The feedback has been positive, suggesting that open-source LLMs are rapidly closing the gap with top-tier proprietary LLMs. We will have to wait and see whether this trend continues.
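To give a sense of what those sizes mean in practice, the sketch below estimates how much memory the weights alone would occupy. It assumes the nominal parameter counts in the model names and 16-bit precision (2 bytes per parameter). The helper name is hypothetical, and the estimate leaves out activations, the context cache, and any quantization.

```python
# Nominal parameter counts taken from the model names (assumption:
# the exact counts differ slightly from these round numbers).
LLAMA_3_1_SIZES = {"8B": 8e9, "70B": 70e9, "405B": 405e9}


def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed to hold the weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


for name, params in LLAMA_3_1_SIZES.items():
    print(f"Llama 3.1 {name}: ~{weight_memory_gb(params):,.0f} GB of weights at 16-bit precision")
```

Under these assumptions the smallest model needs roughly 16 GB for its weights while the largest needs roughly 810 GB, so the 405B configuration calls for multi-GPU server hardware rather than a single consumer card.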

Main Challenges for Open-Source LLMs

Resource Limitations: One of the most significant hurdles for open-source LLMs is the vast amount of resources required for training advanced models. Without the financial backing that proprietary models enjoy, these open-source projects often struggle to reach the same level of sophistication and performance.

Development and Scaling: Scaling an open-source LLM requires not just financial investment but also a collaborative effort from the global tech community. Ensuring consistent and valuable contributions from across the world can be problematic without a centralized strategy.

Access to Talent: Although the open-source community is rich with talent, the coordination of its efforts to maximize efficiency and innovation often lags behind that of more structured corporate development environments.

Security Note: Implementing AI solutions, whether open-source or proprietary, must also take into account security measures to protect data integrity and user privacy. If security and privacy are as important to you as they are to us, consider Team-GPT Enterprise for your Enterprise AI solution.

Role of Corporate Sponsorship and Collaboration

The involvement of corporations in open-source AI projects is more than just a funding boost. It is a strategic collaboration that can provide essential resources, expertise, and infrastructure. Companies like Meta are pivotal in advancing open-source AI by providing computational power and data access, which are critical for training more sophisticated models.

Open-source generative AI models

It’s important to mention the rise of the free and open-source generative AI model, Stable Diffusion. Its developers’ effort to democratize generative AI for maximum accessibility has proven its worth against closed models like Midjourney and DALL-E. We recently wrote an article on which AI image generator is best to use in 2024, and Stable Diffusion is a solid choice.

What to expect next

The road ahead involves not just improvements in technology but also promoting stronger collaborations and securing more substantial investments. The open-source community continues to push the boundaries, driven by a collective commitment to accessible and transparent AI development.

Iliya Valchanov

Iliya teaches 1.4M students on the topics of AI, data science, and machine learning. He is a serial entrepreneur who has co-founded Team-GPT, 3veta, and 365 Data Science. Iliya’s latest project, Team-GPT, is helping companies like Maersk, EY, Charles Schwab, Johns Hopkins University, Yale University, and Columbia University adopt AI in the most private and secure way.