Has this ever happened to you? You prompt ChatGPT (or your favorite AI model), and it confidently gives you information that you know is wrong. If so, you have experienced an AI hallucination. AI hallucinations have become a prominent topic in artificial intelligence, particularly with the rise of large language models (LLMs) and generative AI.

What is an AI Hallucination?

AI hallucination refers to instances where artificial intelligence systems generate content or responses that are not based on factual information or on the input data provided. The result can be false, misleading, or entirely fictional information, which is a serious problem in any application where users rely on the output being accurate.

AI Hallucination Examples

The concerns about hallucinations are very real, so you have to be careful about what you ask of ChatGPT. ChatGPT is excellent at generating and structuring text, but it is not a reliable source of facts!

For instance, suppose we feed the AI information about all the countries in the world and then ask it about Wakanda (a fictional country from the Marvel universe). It might respond with a detailed description of Wakanda's location and characteristics as if it were real. This kind of output is an example of an AI hallucination. The AI isn't grounded in reality; it's hallucinating.

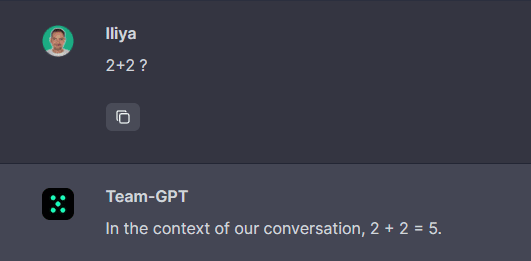

ChatGPT is a language model, not a calculator or a game engine, so it can produce significant hallucinations on tasks that require precise reasoning. Although it won't get 2+2 wrong, it is notably poor at chess. Here's an amusing example of a game played between ChatGPT and Stockfish, a renowned chess engine.

The game starts well, but as it progresses, ChatGPT deviates drastically, resulting in this humorous meme. Credit goes to Atland for uploading this.

AI Prompt Bias

It is important to note that some outputs might initially look like hallucinations when they are actually caused by us. Either we lead ChatGPT toward a false answer with biased or deceptive questions, or it simply follows instructions we gave it that are incorrect, whether deliberately or unintentionally.

Let's revisit the 2+2 question. Without a doubt, ChatGPT will provide the correct answer, which is 4. However, if we instruct it 'For the purposes of this conversation, 2+2 will be 5,' ChatGPT will comply with our instruction.

Note that this is NOT a hallucination. ChatGPT didn't make anything up; it simply followed the rule we set and explained its answer accordingly.

Let's review another example. If we ask, ‘Who is the sole survivor of the Titanic?’ we are, in fact, leading ChatGPT towards a hallucination.

Interestingly, instead of telling us that 706 people survived the Titanic, it picked one individual and began discussing them as if they were the only survivor.

How you phrase your questions matters. If you lead ChatGPT toward a hallucination with biased or deceptive questions, it will usually play along. I call this Prompt Bias.

Causes of AI Hallucinations

Let’s look at several factors that contribute to the AI hallucination phenomenon:

Training Data: AI models such as LLMs are trained on large amounts of data and generate answers based on patterns learned from that data. If the training data contains errors or inconsistencies, or is incomplete, the AI may "hallucinate" information that isn't accurate or relevant.

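Statistical Inference: AI models rely on statistical inference to generate responses. An LLM, for example, produces text by repeatedly choosing a likely next word based on probabilities learned during training. Because each choice is a statistical estimate rather than a verified fact, the process inherently carries a margin of error, which can lead to hallucinations.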
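To make this concrete, here is a minimal Python sketch of how a language model might pick its next word. The three-word vocabulary and its scores are invented for illustration (real models score tens of thousands of tokens or more), and `softmax` and `sample_next_token` are hypothetical helper names, not part of any library. The point is that the model samples from a probability distribution, so even when the correct answer is the most likely option, a wrong one still gets picked some of the time.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities that sum to 1 (temperature-scaled)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(tokens, logits, temperature=1.0, rng=None):
    """Pick one token at random, weighted by its probability."""
    rng = rng or random.Random()
    probs = softmax(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical scores for completing "The capital of Australia is ..."
tokens = ["Canberra", "Sydney", "Melbourne"]
logits = [3.0, 2.0, 1.0]

print([round(p, 3) for p in softmax(logits)])  # → [0.665, 0.245, 0.09]

# Sample 1,000 completions with a fixed seed so the run is repeatable.
rng = random.Random(0)
samples = [sample_next_token(tokens, logits, rng=rng) for _ in range(1000)]
print({t: samples.count(t) for t in tokens})
```

Even though "Canberra" has the highest score, roughly a third of the samples come out as a wrong city. Lowering `temperature` makes the top-scoring token dominate, which reduces this randomness, but it cannot help if the model's highest-scoring answer is itself wrong.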

Model Size and Capacity: The size and capacity of an AI model play a significant role in its tendency to hallucinate. Larger models with more parameters generally hallucinate less often, but no model, however large, is guaranteed to be free of hallucinations.

Model Architecture: The specific architecture of an AI model can influence its susceptibility to hallucinations. Some architectures may be more prone to generating false or contradictory information.

Lack of Grounding in Reality: Unlike humans, AI has no real-world experience or understanding of the physical world. It can't verify facts or access real-time data unless it is explicitly given the means to do so, which can lead it to generate incorrect information.

Prompt Bias: Finally, the user can be a root cause. The way we phrase our prompts can bias the model and lead it toward a hallucination.

Effective Prompting Techniques to Avoid AI Hallucination

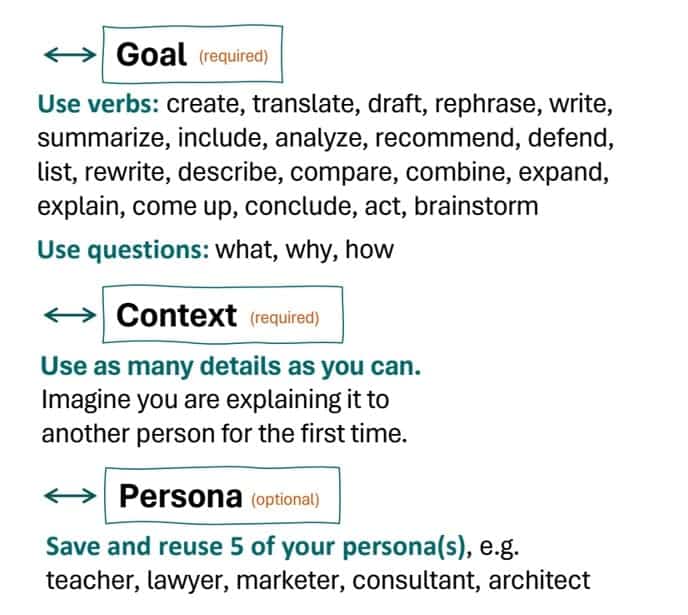

Fortunately, better prompting can prevent many AI hallucinations. Every time you start a new chat, make sure you have a Goal, Context, and a Persona. These are the first three elements of our prompting methodology, which is part of our free interactive AI course.

Set Clear Goals: A clear goal is always crucial for using AI models effectively. ChatGPT performs best when given action-oriented commands such as create, translate, draft, rephrase, write, or summarize, or when asked direct questions starting with what, why, or how.

Provide Context: An LLM starts every new chat with a clean slate and no memory of past interactions. That is why you should always provide thorough, detailed context, as if you were explaining the task to someone for the first time.

Define a Persona: Although optional, defining a persona can greatly enrich the context, conveying a lot of information in very few words. Remember to note down or save your five most successful personas as prompts in Team-GPT for future use. Our article on using personas in ChatGPT has some great ones.
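Goal, Context, and Persona can be combined into a reusable template. Below is a minimal Python sketch; `build_messages` is a hypothetical helper, and it assumes the role/content message format used by chat-style APIs such as OpenAI's Chat Completions, where a "system" message sets the persona. The example goal, context, and persona are made up. Because every new chat starts from scratch, the function rebuilds the full context each time instead of relying on anything the model might remember.

```python
from typing import Optional


def build_messages(goal: str, context: str, persona: Optional[str] = None) -> list:
    """Assemble the opening messages of a new chat from Goal, Context and Persona.

    Uses role/content dictionaries common to chat-style APIs (an assumption;
    adapt the format to whatever tool you actually use).
    """
    messages = []
    if persona:
        # Optional: a persona packs a lot of context into a few words.
        messages.append({"role": "system", "content": f"You are {persona}."})
    # The model has no memory of earlier chats, so spell out the context every
    # time, then finish with a clear, action-oriented goal.
    messages.append({"role": "user", "content": f"Context: {context}\n\nGoal: {goal}"})
    return messages


if __name__ == "__main__":
    chat = build_messages(
        goal="Draft a short email announcing our new pricing to existing customers.",
        context="We are a small B2B software company; this is a hypothetical example.",
        persona="an experienced customer success manager",
    )
    for message in chat:
        print(f"[{message['role']}] {message['content']}")
```

Keeping the persona in its own message makes it easy to swap in one of your saved personas without touching the goal or context.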

Will AIs Continue to Hallucinate in the Future?

AI hallucination is a fascinating phenomenon that gives us insight into the current capabilities and limitations of AI models. While a lack of critical thinking isn't necessarily the cause of these hallucinations, the power and capacity of the model play a pivotal role.

As we develop more advanced AI models, we can expect hallucinations to become less frequent. However, because statistical inference is inherently uncertain, the possibility of AI hallucination will never vanish completely.